LucidLink outage on April 29, 2024

May 2024

Introduction

On April 29, 2024, at 12:55 UTC, LucidLink experienced a malicious attack against our service resulting in an outage that affected all customers. Access was fully restored for everyone on May 1, 2024 at 12:06 UTC. We are extremely sorry for the disruption the outage caused our customers.

Due to the severity and sophistication of the attack, law enforcement, legal, and external cyber forensic teams have been engaged, and an investigation is underway.

This was not a ransomware attack. LucidLink has no indication that any personal, corporate, or other data was accessed without authorization, leaked, or otherwise affected. We attribute this to LucidLink’s zero-knowledge encryption model.

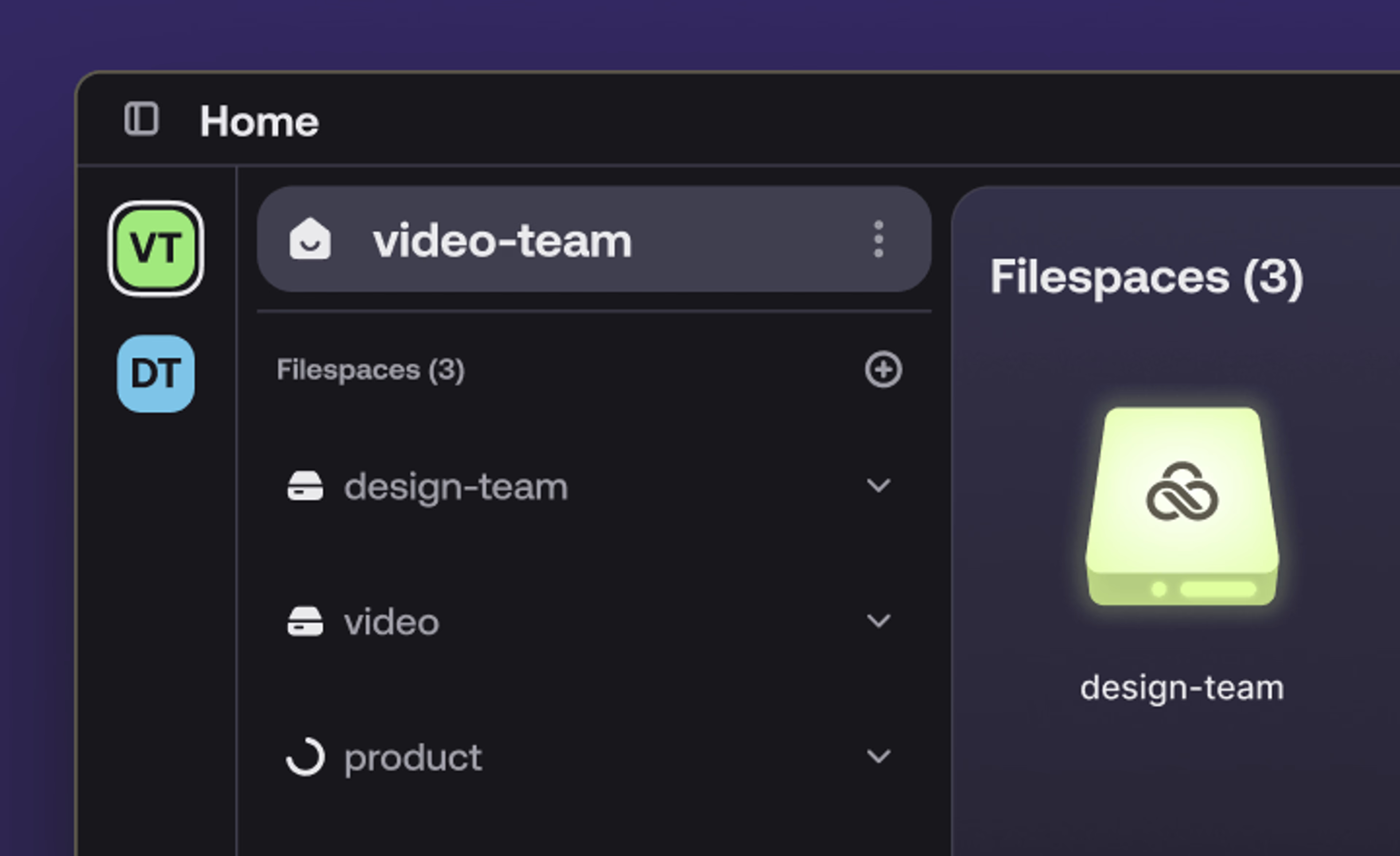

Essential LucidLink Architecture

LucidLink provides a SaaS-based storage collaboration platform that leverages cloud storage, displays it as a local drive, and allows users concurrent access to files. Customers worldwide have replaced file-sync technologies, NAS devices, and local file servers with LucidLink filespaces to improve productivity when collaborating on projects—particularly remote and hybrid teams working from distributed locations.

The LucidLink zero-knowledge service model makes it theoretically impossible for any metadata or file data to be accessed by unauthorized parties unless they hold the encryption key that is only accessible by the customer. If a customer loses their encryption key, it is not possible for LucidLink to reset it for them. This is by design and is crucial for our zero-knowledge encryption model.

There are four components to LucidLink’s architecture:

LucidLink client

Object store (cloud or on-prem)

LucidLink metadata service

LucidLink discovery service

The LucidLink client is installed on customer devices and displays the filespace as a local drive.

The object store is a container of objects, whether provided by a cloud-storage vendor or stored on-prem. The objects are encrypted pieces of files that can only be decoded through the client using the customer’s own encryption key. Due to the zero-knowledge encryption model, LucidLink does not have access to this encryption key and cannot decrypt any of the data.

The LucidLink metadata service contains the metadata for the filesystem such as file and folder names. Such identifying metadata is also encrypted and therefore unidentifiable without the client encryption key, which is only held by the customer and is not accessible by LucidLink. There is a separate metadata server for each filespace, so the impact of any disruption to one server is limited to one filespace.

The LucidLink discovery service provides clients running on customer devices the information to connect to the appropriate metadata server for the desired filespace.
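To make the division of labor concrete, here is a minimal Python sketch of the lookup a client performs before it can reach a filespace. The names `DiscoveryService`, `MetadataServer`, and `connect`, the filespace names, and the addresses are hypothetical illustrations, not LucidLink's actual API. The sketch only shows that each filespace resolves to its own metadata server, so one unhealthy server blocks only its own filespace.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetadataServer:
    """Hypothetical record for one filespace's dedicated metadata server."""
    address: str
    healthy: bool = True


class DiscoveryService:
    """Toy discovery service: maps a filespace name to its metadata server."""

    def __init__(self) -> None:
        self._registry: dict[str, MetadataServer] = {}

    def register(self, filespace: str, server: MetadataServer) -> None:
        self._registry[filespace] = server

    def lookup(self, filespace: str) -> MetadataServer:
        # Raises KeyError for an unknown filespace.
        return self._registry[filespace]


def connect(discovery: DiscoveryService, filespace: str) -> str:
    """Client-side flow: ask discovery first, then use that one metadata server."""
    server = discovery.lookup(filespace)
    if not server.healthy:
        raise ConnectionError(f"metadata server for {filespace!r} is unresponsive")
    return server.address


discovery = DiscoveryService()
discovery.register("studio-a", MetadataServer("10.0.0.11"))
discovery.register("studio-b", MetadataServer("10.0.0.12", healthy=False))

print(connect(discovery, "studio-a"))  # 10.0.0.11
try:
    connect(discovery, "studio-b")
except ConnectionError as err:
    print(err)
```

Because `studio-b` has its own server, its failure leaves `studio-a` reachable. The incident was severe precisely because most servers failed at once rather than one at a time.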

The basic architecture is shown below.

Technical Overview and Incident Timeline

Initial Outage and First Response

Around 12:55 UTC on April 29, most of LucidLink’s metadata servers suddenly became unresponsive. Although these servers are spread across multiple time zones on several continents, they became unresponsive simultaneously, and the cause of the disruption was unknown at the time.

When a client is involuntarily disconnected from a metadata server, it attempts to reconnect. Before it can do so, it must contact the discovery service to look up the IP address of that specific metadata server. Because most metadata servers went down at once, all of their LucidLink clients contacted the discovery service simultaneously. The flood of incoming requests overloaded the discovery service, causing client connections to fail. This is why we initially suspected a DDoS attack on the discovery service. In fact, the connection requests came from legitimate LucidLink clients; the underlying cause was that most metadata servers were unresponsive.
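The reconnect storm can be illustrated with a toy, tick-based model in Python. Everything here is an assumption for illustration: the discovery service answers a fixed number of lookups per tick, rejected clients retry on the very next tick, and overload never reduces throughput further (a real overloaded service often degrades, so this model is optimistic). It compares the same number of lookups arriving all at once versus spread evenly over time.

```python
def simulate(arrivals: list[int], capacity: int) -> tuple[int, int]:
    """Return (ticks_until_all_served, failed_attempts).

    arrivals[t] is the number of clients issuing their first lookup at tick t.
    The discovery service answers at most `capacity` lookups per tick;
    every rejected client retries on the next tick (no backoff).
    """
    pending = 0
    failed = 0
    tick = 0
    while tick < len(arrivals) or pending > 0:
        if tick < len(arrivals):
            pending += arrivals[tick]
        served = min(pending, capacity)
        failed += pending - served  # each rejected lookup is a failed connection
        pending -= served
        tick += 1
    return tick, failed


# 1,000 clients all reconnecting at the same instant...
print(simulate([1000], capacity=100))      # (10, 4500)
# ...versus the same 1,000 lookups spread evenly over 10 ticks.
print(simulate([100] * 10, capacity=100))  # (10, 0)
```

Both scenarios finish in the same number of ticks, but the simultaneous burst produces 4,500 rejected attempts, each one a failed client connection. That is how legitimate clients can produce traffic that looks like a DDoS.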

We found that the disk attached to each of these servers was corrupted. It’s noteworthy that this corruption happened at about the same time across servers in multiple time zones on different continents. When we examined some of the corrupted disks, we found that large portions of the disks had been overwritten with random data.
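Establishing that the corruption was simultaneous depends on comparing failure times on one absolute timeline, since servers in different regions may log local clock times. The Python sketch below uses hypothetical local timestamps from three servers at hypothetical UTC offsets, and a hypothetical five-minute threshold; it illustrates the reasoning and is not LucidLink's forensic tooling.

```python
from datetime import datetime, timedelta, timezone


def tz(hours: int) -> timezone:
    """Fixed-offset timezone, e.g. tz(-4) for UTC-4."""
    return timezone(timedelta(hours=hours))


# Hypothetical local-clock failure times reported by servers in three regions.
observed = [
    datetime(2024, 4, 29, 8, 55, tzinfo=tz(-4)),
    datetime(2024, 4, 29, 14, 56, tzinfo=tz(2)),
    datetime(2024, 4, 29, 22, 55, tzinfo=tz(10)),
]


def failure_spread(times: list[datetime]) -> timedelta:
    """Gap between earliest and latest failure after normalizing to UTC."""
    utc = [t.astimezone(timezone.utc) for t in times]
    return max(utc) - min(utc)


def looks_simultaneous(times: list[datetime], window: timedelta = timedelta(minutes=5)) -> bool:
    # Independent disk faults would not normally line up to within minutes
    # across continents; a tight window points to a single common cause.
    return failure_spread(times) <= window


print(failure_spread(observed))      # 0:01:00
print(looks_simultaneous(observed))  # True
```

Read naively, the local times (08:55, 14:56, 22:55) look hours apart; in UTC they fall within one minute of each other.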

At this point, we formed two workstreams: (i) containment and remediation and (ii) restoring service to customers.

Restoring Service to Customers

In order to restore the critical metadata service, we had to first replicate its infrastructure. This involved deploying many thousands of new metadata servers with the appropriate build image. Doing so at such a massive scale required a different approach and process. We created the new process and deployed the new infrastructure by approximately April 30, 2024, 18:00 UTC.

The next step of the recovery process was to initialize each newly deployed metadata server to the last known state of its filespace. As part of our routine disaster recovery operations, we create backup copies of every metadata server on a rolling six-hour schedule. Recovering metadata servers from backups is also a standard process that we perform periodically, but the attack required us to build a new recovery process that could operate at this scale within an urgent timeframe.
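Because backups run on a fixed interval, the interval bounds how old the newest restore point can be. The Python sketch below computes the most recent scheduled backup at or before a given moment. The schedule anchors (the `first_backup` values) are hypothetical; the source states only that backups follow a rolling six-hour schedule, and the second example assumes the schedule is staggered per server.

```python
from datetime import datetime, timedelta, timezone

UTC = timezone.utc
INTERVAL = timedelta(hours=6)  # six-hour cadence, as described above


def latest_backup(now: datetime, first_backup: datetime, interval: timedelta = INTERVAL) -> datetime:
    """Most recent scheduled backup at or before `now`."""
    if now < first_backup:
        raise ValueError("no backup has been taken yet")
    completed_intervals = (now - first_backup) // interval  # timedelta // timedelta -> int
    return first_backup + completed_intervals * interval


incident = datetime(2024, 4, 29, 12, 55, tzinfo=UTC)

# Hypothetical schedule anchored at midnight UTC.
a = latest_backup(incident, datetime(2024, 4, 29, 0, 0, tzinfo=UTC))
# Hypothetical schedule for another server, staggered to start at 02:30 UTC the day before.
b = latest_backup(incident, datetime(2024, 4, 28, 2, 30, tzinfo=UTC))

print(a, incident - a)  # 2024-04-29 12:00:00+00:00 0:55:00
print(b, incident - b)  # 2024-04-29 08:30:00+00:00 4:25:00
```

Whatever the anchor, `incident - latest_backup(...)` is always less than six hours, which bounds the age of the backup each restored server starts from.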

This new process was created, tested, and then deployed on a smaller scale for verification. After 10% of the metadata servers were deployed successfully, the process was ready to run at scale for the remaining thousands of metadata servers. To expedite recovery for the remaining customers, we dramatically scaled up the build infrastructure to speed up deployments.
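The pilot-then-scale pattern in this paragraph can be sketched in Python as follows. `restore_from_backup` is a hypothetical stub standing in for the real rebuild step (here it simply fails for names ending in `-needs-manual`), and the 32-worker thread pool is an arbitrary stand-in for scaled-up build infrastructure. Filespace names and counts are invented for the demo.

```python
from concurrent.futures import ThreadPoolExecutor


def restore_from_backup(filespace: str) -> bool:
    """Hypothetical stub for rebuilding one metadata server from its latest backup.

    Returns False for names that (in this demo) need manual intervention.
    """
    return not filespace.endswith("-needs-manual")


def staged_restore(
    filespaces: list[str], pilot_percent: int = 10, workers: int = 32
) -> tuple[list[str], list[str]]:
    """Restore a pilot batch first; continue at scale only if every pilot restore succeeds."""
    # Ceiling of pilot_percent% of the fleet using integer math; at least one filespace.
    pilot_size = max(1, (len(filespaces) * pilot_percent + 99) // 100)
    pilot, rest = filespaces[:pilot_size], filespaces[pilot_size:]

    if not all(restore_from_backup(fs) for fs in pilot):
        raise RuntimeError("pilot batch failed; fix the process before scaling up")

    # A larger pool means more restores in flight at once.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(restore_from_backup, rest))  # map preserves input order

    restored = pilot + [fs for fs, ok in zip(rest, results) if ok]
    needs_manual = [fs for fs, ok in zip(rest, results) if not ok]
    return restored, needs_manual


# Hypothetical fleet of 100 filespaces, two of which the automated pass cannot handle.
fleet = [f"fs-{i:03d}" for i in range(98)] + ["fs-098-needs-manual", "fs-099-needs-manual"]
restored, needs_manual = staged_restore(fleet)
print(len(restored), needs_manual)  # 98 ['fs-098-needs-manual', 'fs-099-needs-manual']
```

Gating the large batch on a clean pilot means a flaw in the new process surfaces on a tenth of the fleet instead of all of it, and anything the automated pass cannot restore is set aside for manual handling.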

The final phase of recovering the remaining filespace metadata servers from backup was initiated at 19:30 UTC on April 30, 2024, and by 02:55 UTC on May 1, 2024 the automated process was finished. At this point, we had restored access for about 98% of our customers. Due to several factors, recovering the remaining filespaces required manual intervention. A dedicated team was deployed to address each one.

By May 1, 2024, 12:06 UTC all filespace metadata servers were back online and all customers had access to their filespaces.

Containment and Remediation

As part of LucidLink’s cyber security incident response process, our teams took a systematic approach to troubleshooting and triage, and we were able to identify, contain and remediate the source of the disruption successfully.

Although the incident is still under full investigation by third-party forensic experts, we believe the root cause was malicious exploitation of an internal server with access to the production environment. This server was used to gain elevated privileges and to execute a script that corrupted the disk attached to each metadata server.

LucidLink promptly began containment by first blocking all internet-facing traffic, restricting infrastructure access to essential personnel only, and running extensive vulnerability tests and malware scans. The LucidLink response team quarantined all servers with elevated privileges to access the production environment and continued with extensive analysis of network, system, EDR, VPN, access, and application logs. After rotating credentials, including replacing all API tokens, SSH key pairs, and local account passwords, LucidLink engaged law enforcement as well as third-party specialists for assistance. Through this process, LucidLink updated its network topology and implemented additional access restrictions to further protect its server infrastructure.

Summary

In summary, the incident resulted in the temporary loss of metadata for most filespaces until our team recovered the metadata from backup systems. File data, stored separately in object storage, remained intact, but LucidLink clients could not access that data because they needed the metadata to determine which objects to read. LucidLink has re-enabled that access.
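The dependency described in this summary can be shown with a small Python model. The object IDs, paths, and the representation of a file as an ordered list of object IDs are simplifying assumptions for illustration; real objects are encrypted and decrypted by the client with the customer's key, which this sketch omits.

```python
# Hypothetical object store: opaque object IDs -> stored bytes. In a real
# filespace these bytes would be ciphertext; decryption is omitted here.
object_store: dict[str, bytes] = {
    "obj-7f3a": b"<chunk 1>",
    "obj-19c2": b"<chunk 2>",
    "obj-b801": b"<chunk 3>",
}

# Hypothetical metadata: for each path, the ordered object IDs that make up the file.
metadata: dict[str, list[str]] = {
    "/projects/edit/scene01.mov": ["obj-19c2", "obj-7f3a"],
    "/projects/edit/notes.txt": ["obj-b801"],
}


def read_file(path: str, meta: dict[str, list[str]], store: dict[str, bytes]) -> bytes:
    """Reassemble a file: look up its object IDs in metadata, then fetch each object."""
    try:
        object_ids = meta[path]
    except KeyError:
        # Without a metadata entry the client cannot tell which objects to read.
        raise FileNotFoundError(path) from None
    return b"".join(store[oid] for oid in object_ids)


print(read_file("/projects/edit/scene01.mov", metadata, object_store))  # b'<chunk 2><chunk 1>'

try:
    read_file("/projects/edit/scene01.mov", {}, object_store)  # metadata lost, objects intact
except FileNotFoundError as err:
    print("unreadable without metadata:", err)
```

The object store is identical in both calls; only the metadata differs. Restoring the metadata is therefore what makes the intact objects readable again.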

Incident Timeline

Apr 29, 2024

12:55 UTC Approximately 5,000 filespaces became unresponsive.

13:05 UTC The support team received the first ticket regarding the incident.

17:00 UTC We notified customers that many filespaces were unresponsive.

17:16 UTC We discovered that some metadata servers had corrupted disks.

Apr 30, 2024

13:13 UTC We developed a program to automatically recover metadata servers from backups.

15:00 UTC We scaled the discovery service horizontally to run on multiple instances in order to accommodate the surge in traffic.

18:00 UTC We completed the provisioning of new metadata servers.

19:30 UTC The first affected filespace was restored.

May 1, 2024

00:10 UTC We started the automated restoration of filespaces.

02:55 UTC The automated process restored 98% of affected filespaces.

10:00 UTC We started manual restoration of the remaining 2% of affected filespaces.

12:06 UTC All affected filespaces were back online.
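The elapsed times implied by the timeline can be checked with a few lines of standard-library Python. The timestamps are copied from the entries above (all UTC), and the labels are paraphrased.

```python
from datetime import datetime, timedelta, timezone

UTC = timezone.utc

# Milestones taken from the incident timeline (all UTC).
milestones = {
    "outage began": datetime(2024, 4, 29, 12, 55, tzinfo=UTC),
    "new metadata servers provisioned": datetime(2024, 4, 30, 18, 0, tzinfo=UTC),
    "automated restoration reached 98%": datetime(2024, 5, 1, 2, 55, tzinfo=UTC),
    "all filespaces back online": datetime(2024, 5, 1, 12, 6, tzinfo=UTC),
}


def hours_minutes(delta: timedelta) -> str:
    """Format a non-negative timedelta as e.g. '47h 11m'."""
    hours, rem = divmod(int(delta.total_seconds()), 3600)
    return f"{hours}h {rem // 60:02d}m"


start = milestones["outage began"]
for label, when in milestones.items():
    # Elapsed time since the outage began, right-aligned for readability.
    print(f"{hours_minutes(when - start):>8}  {label}")
```

This prints 0h 00m, 29h 05m, 38h 00m, and 47h 11m for the four milestones: about 38 hours until most customers had access again, and just over 47 hours until every filespace was back online.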

Additional Prevention and Next Steps

We take the availability of our services extremely seriously. Following this outage, we are continuing our efforts and have ongoing measures in place to further fortify our services against similar attacks in the future:

Continued work with law enforcement: we have notified the Federal Bureau of Investigation’s Internet Crime Complaint Center and are seeking their assistance.

Continued work with third-party forensic specialists: since service was restored, we have continued a detailed examination and testing of the network, endpoints, and systems to both (i) help with the root cause investigation and (ii) provide additional threat-hunting capabilities that help validate the continued integrity of our environment. We are confident this course of action will further enhance our already robust existing controls.

Additional security measures: like any SaaS platform facing a constantly evolving universe of third-party threats and malicious attacks, we always have opportunities to improve security in our product and environment. These efforts involve not only deploying even more robust credential management but also additional access controls and more comprehensive vulnerability scanning.

Strengthen our security infrastructure: (i) dedicate additional engineering resources to security-related projects; and (ii) scale our current organization and capabilities by implementing a security operations center for enhanced threat monitoring.

Conclusion

While we are proud that our zero-knowledge encryption model performed as intended and we have no indication that our customer data was compromised as a result of the temporary outage, the entire company has learned and grown from this experience. As a company made of both enterprise storage technologists and former content creators, our superpower has always been understanding the workflows and experiences of content creators. Due to this, we deeply felt the pain of our customers’ downtime, anxiety, and frustration.

We remain:

Committed to ensuring that the security of our infrastructure remains appropriate and to reducing the likelihood of another outage like this one.

Devoted to our customers and to continuing to earn your trust in the system that has become part of your everyday workflows.

Intent on providing ongoing and transparent communication regarding relevant findings and outage resolution.

Dedicated to supporting the talented creatives who have been in our DNA since the beginning.
