AI in Media and Entertainment: how to collaborate with AI effectively

April 2024

11 mins

Table of contents

- 5 ways to use AI in Media and Entertainment

- Benefits of using AI in Media and Entertainment

- Enhancing creativity with generative AI

- Automating media workflows with AI

- Personalizing content with AI

- Ethical best practices for AI in media

- Future AI trends in Media and Entertainment

- Prepare for the AI future with LucidLink

The technologies behind artificial intelligence (AI) have been around in various capacities for years, whether through algorithmic recommendations on platforms like Spotify and Netflix or auto-tagging a company’s photo library via object detection models. But in the past year, generative AI in chatbots like ChatGPT and image generators like Midjourney has led to an explosion of interest and optimism in the space. Statista predicts the AI industry will grow twentyfold by 2030, up to two trillion dollars. The field is rapidly developing and attracting regulators’ attention on both sides of the pond, most recently demonstrated by an executive order from President Biden.

AI has the potential to transform pretty much every industry, from law to agriculture to defense, in a way not seen since the advent of the personal computer. In the media and entertainment sector, it has already been the cause of disruption. Its usage was a key sticking point in 2023 contract negotiations by the Screen Actors Guild and the Writers Guild of America, as rank-and-file actors fought against signing over their likenesses to be generated in perpetuity and writers fought against the outsourcing of their labor to AI.

However, usage of AI in Media and Entertainment (M&E) doesn’t need to be controversial. It’s already being used in many applications, and holds exciting possibilities to streamline workflows, boost creativity, and inspire new types of art. In this article, we’ll explore some of those use cases and outline ways creatives can wield these technologies to their own benefit.

5 ways to use AI in Media and Entertainment

The Media and Entertainment sector has long been a pioneer in the use of technology, employing algorithms to generate eye-popping special effects in movies as old as Westworld (1973) and Tron (1982). More recently, algorithms have been used to generate entire worlds in games like No Man’s Sky. Algorithms also order our social media feeds, defining not only the public’s experience of the internet but also the ways media organizations frame and distribute their content.

The rise of AI is no different. As the technology continues to mature, creatives in the M&E sector should look to leverage AI in five key ways:

- Enhancing creativity with generative AI

- Automating workflows with AI helpers

- Personalizing content to delight their audience

- Advocating ethical use cases for AI

- Innovating future applications of the technology

We’ll discuss all of these in more depth below. But first, let’s take a quick, high-level look at why we should be using these technologies in the first place.

Benefits of using AI in Media and Entertainment

One thing everyone seems to agree on about AI is that it’s going to change things pretty dramatically. Some of the benefits it can bring to the media and entertainment sector in particular include:

Better content recommendations: More sophisticated recommendation engines can help people find new favorite streamers, bands, TV shows, news outlets, and more. For creatives, this can lead to niche content finding its ideal audience.

Audience insights: AI can analyze viewer data to help better understand what audiences are looking for. For creatives, imagine using more detailed strategic insights as a prompt for brainstorming.

Advertising and monetization: Targeted advertising opens up possibilities for agency creatives, and can allow for less disruptive paths toward monetization.

Content moderation: User-generated content can be labor-intensive and difficult to moderate manually, but AI can proactively monitor and filter harmful content. This leads to a better user experience on social platforms, for example.

Predictive analytics: Analyzing audience behavior at scale can reveal changing preferences, leading to better forecasting and content acquisition strategies. Creatives can think of this as fuel for richer strategic prompts.

Cost reductions: Streamlining content production and automating parts of content generation can make it faster and cheaper to create professional-looking content. The challenge lies in doing so ethically and in a way that prioritizes the audience’s enjoyment.

Now that we’ve covered the why, let’s dig more into the how.

Enhancing creativity with generative AI

When we talk about AI these days, generative AI gets much of the focus, and for good reason: It’s impressive! Generative AI typically works by feeding many examples of a given type of content into a model that learns to make associations between them and spot patterns. Users can then have the AI make original creations based on those associations. A model trained on numerous examples of fine art can create a painting of a Lamborghini in Van Gogh’s distinct style, assuming a user first requested such a thing.
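To make the "learn associations, then generate" idea concrete, here is a deliberately tiny Python sketch. It is not how tools like ChatGPT or Midjourney are built (those rely on large neural networks). It is a toy word-pair model, and the function names and example sentences are made up for illustration. It only shows the general loop: collect patterns from examples, then recombine them into something new.

```python
import random
from collections import defaultdict

def train_bigram_model(examples):
    """Record which word follows which across all training examples."""
    model = defaultdict(list)
    for text in examples:
        words = text.split()
        for current, following in zip(words, words[1:]):
            model[current].append(following)
    return model

def generate(model, start, max_words=8, seed=0):
    """Walk the learned word-to-word associations to build a new sequence."""
    rng = random.Random(seed)  # fixed seed keeps the toy output repeatable
    words = [start]
    # Stop at the length limit or when the last word has no known follower.
    while len(words) < max_words and model.get(words[-1]):
        words.append(rng.choice(model[words[-1]]))
    return " ".join(words)

# Hypothetical training examples, invented for this sketch.
examples = [
    "the hero crosses the desert",
    "the hero finds the map",
    "the villain hides the map",
]
model = train_bigram_model(examples)
print(generate(model, "the"))
```

Every word pair in the output appeared somewhere in the examples, but the full sentence may not have. That is the same basic trade a much larger model makes: nothing comes from nowhere, yet the combination can be new.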

Some of the types of media that AI can currently create include:

Writing: Tools like GPT-3.5 and GPT-4 by OpenAI can create outlines, scripts, ideas, and articles.

Artwork: Tools like DALL-E, Midjourney, and Adobe Firefly can create realistic images and professional-looking illustrations from seemingly any prompt you feed them.

Music: OpenAI’s Jukebox can create snippets of music in a variety of genres and styles, including rudimentary singing.

Speech: Google DeepMind’s WaveNet is one of the more popular tools for creating realistic-sounding human speech. With sufficient samples, it can reproduce the voices of specific people.

Video: Runway ML can generate short video clips based on text input, whether creating a net-new creative work or working from a base sample. New tools in development at companies like Meta and Adobe look to make AI-generated videos as seamless as ChatGPT is for written content.

People working in creative disciplines sometimes look at technologies like the above and see them as a threat. But they can also just as easily be used to enhance creativity. Think of AI the way Steve Jobs thought of the computer: as a “bicycle for our minds,” a tool humans created to perform tasks better than we would’ve been able to otherwise.

Here are a few ways AI can help the creative process:

Handling procedural writing, like sports recaps, financial reports, and weather forecasts. This then frees journalists up for more in-person interviewing and investigative writing.

Spurring creativity, generating germs of ideas quickly for human creatives to refine. Imagine feeding an art prompt into Midjourney along with a handful of descriptors and using that as the first step of the creative process, or asking ChatGPT to give you 10 ideas matching a creative brief.

Professionalizing small operations. Teams who are bootstrapping their vision can outsource some creativity to AI while they focus more on core competencies.

Leading to more interactive forms of entertainment, including chatbots with fictional characters as well as custom, user-generated content.

Helping with research. Asking AI extremely specific questions, such as “what are some color schemes that may be associated with the Victorian era?”, can kickstart the research process. In this way, it functions similarly to search: as a starting point for deeper dives.

Helping users stream video at a higher resolution with AI-assisted encoding, and boosting frame rates in games with AI image generation, giving audiences a richer, more immersive visual experience.

Automating media workflows with AI

As fun as the creative process is, some aspects of it can just be boring. AI is particularly good at speeding up some of the more tedious and time-consuming aspects of content creation across media and entertainment workflows. Let’s take a look at some of the ways in which AI can help creative teams speed through the boring stuff so they can focus on the more interesting parts of the workflow.

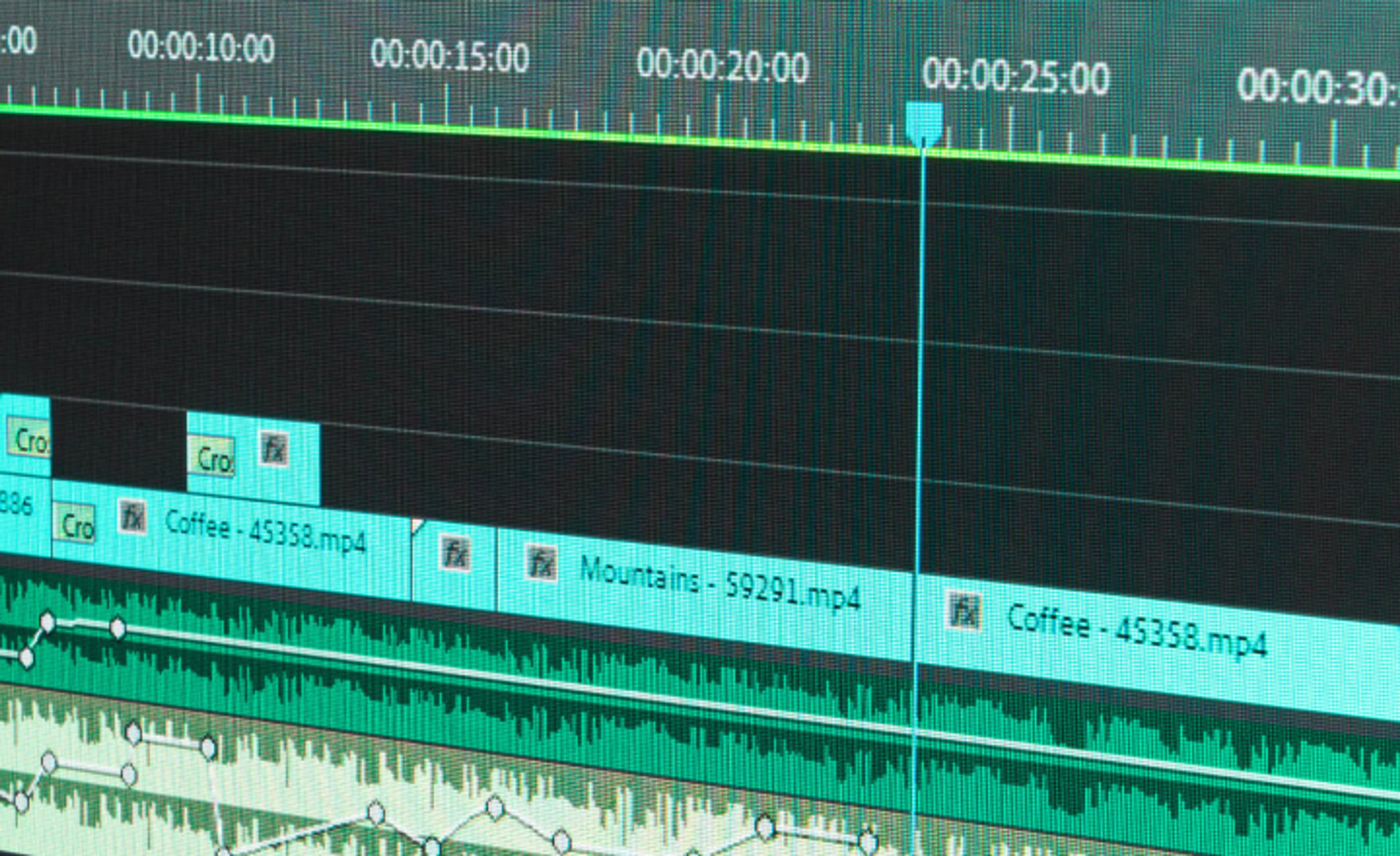

Color correction: DaVinci Resolve and Adobe Premiere Pro both utilize machine learning to assist in color matching and color correction across multiple shots.

Masking: Features like DaVinci Resolve’s Magic Mask leverage neural processing to automate motion-tracked masks. They save hours of work by automatically isolating specific parts of videos in order to then apply effects or other enhancements like hiding objects or changing a background.

Audio syncing: Adobe Premiere Pro and Final Cut Pro X can automatically sync audio and video tracks, minimizing the need for painstaking timecode management.

Metadata tagging: AI tools like Adobe Sensei and Clarifai can automatically tag images and videos with metadata, a task that is laborious to do by hand. GrayMeta and Microsoft Azure, meanwhile, can extract insights from videos and automate their categorization. All of these tagging tools lead to faster and more comprehensive searching across large data sets, like sports clips or news broadcasts.

Subtitling: Descript and Google Cloud can automatically convert audio to text, speeding up the subtitle process and adding to the rich pool of AI-powered metadata.

Localization: Synthesia can generate videos of AI avatars delivering any given line in any given language with lifelike lip-syncing and facial expressions. HeyGen does this as well but can also localize any existing video by replicating a speaker’s original words, tone, and expressions in other languages.

Video editing tools: Adobe Premiere Pro and After Effects, meanwhile, can use AI to remove objects and help determine spots for cuts.

All of these tools help speed up pre-existing creative workflows in the media industry, cutting out time-consuming processes and allowing creatives to devote more attention to deeper creative efforts.
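To see why automatic tagging speeds up search, consider a minimal Python sketch of a tag index. It assumes the tags have already been produced by some tagging tool. The clip IDs, tags, and function names are hypothetical and not taken from any of the products above.

```python
from collections import defaultdict

def build_tag_index(clips):
    """Map each tag to the set of clip IDs that carry it."""
    index = defaultdict(set)
    for clip_id, tags in clips.items():
        for tag in tags:
            index[tag.lower()].add(clip_id)
    return index

def search(index, *tags):
    """Return the clip IDs that carry every requested tag, in sorted order."""
    matches = [index.get(tag.lower(), set()) for tag in tags]
    return sorted(set.intersection(*matches)) if matches else []

# Hypothetical tags, as an auto-tagging tool might emit them for sports footage.
clips = {
    "clip_001": ["goal", "penalty", "stadium"],
    "clip_002": ["interview", "stadium"],
    "clip_003": ["goal", "replay"],
}
index = build_tag_index(clips)
print(search(index, "goal"))             # ['clip_001', 'clip_003']
print(search(index, "goal", "stadium"))  # ['clip_001']
```

Once the index exists, finding every clip with a given tag is a lookup rather than a scrub through hours of footage. The hard part, which the AI tools take on, is generating accurate tags in the first place.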

Personalizing content with AI

One futuristic dream people occasionally float about AI is the idea of completely, 100% personalized content: an AI learns your favorite medium, the artists you like within it, and your usage patterns, then generates entertainment specifically for you. This seems as interesting as it does a little deflating, particularly for people who like to be surprised or challenged by the culture they consume.

Perhaps more interesting is AI’s ability not to generate completely custom-built content for each user but to personalize existing content for them. For example, recommendation algorithms, like those on TikTok, Spotify, or Netflix, are getting steadily better at solving the problem of “decision fatigue” for users, proactively suggesting things they may like. Additionally, B2B tools like Tavus, Maverick, and bHUman can be used to make customized videos for sales, marketing, and customer service purposes.
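For a rough sense of how a recommendation algorithm can suggest "things they may like," here is a small Python sketch. It is not the method used by TikTok, Spotify, or Netflix, whose systems are far more sophisticated and not public in detail. It assumes a simple approach in which viewers with overlapping watch histories are treated as similar, and all names and titles are invented.

```python
from collections import Counter

def recommend(target_user, histories, top_n=2):
    """Suggest unseen titles, weighted by how similar other viewers are."""
    seen = histories[target_user]
    scores = Counter()
    for user, titles in histories.items():
        if user == target_user:
            continue
        # Jaccard similarity: shared titles divided by all titles either viewer watched.
        union = seen | titles
        similarity = len(seen & titles) / len(union) if union else 0.0
        for title in titles - seen:
            scores[title] += similarity
    return [title for title, score in scores.most_common(top_n) if score > 0]

# Hypothetical watch histories.
histories = {
    "ana": {"Space Docs", "Jazz Live", "Indie Shorts"},
    "ben": {"Space Docs", "Jazz Live", "Noir Classics"},
    "cy": {"Cooking Hour", "Noir Classics"},
    "dee": {"Space Docs", "Synth Sessions"},
}
print(recommend("ana", histories))  # ['Noir Classics', 'Synth Sessions']
```

Here "Noir Classics" ranks first because the viewer who watched it shares the most history with ana. Titles watched only by viewers with nothing in common are left out entirely.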

Of course, personalization and the repurposing of data raise privacy concerns, so it’s worth diving into how we can remain ethical while exploring these exciting technologies.

Ethical best practices for AI in media

With a new technology as powerful as AI, ethical concerns are top of mind. Some of the earliest use cases of technology like deepfakes were nonconsensual, for example, and many writers and news platforms are working to protect their work from being scraped for use in large language models. For these reasons, it’s important to keep up with ethical best practices in AI. The technology will only be used more and more widely, so these are likely to evolve, but a few principles to keep in mind are:

Be transparent

For now, it’s best to label AI-generated work, particularly if it’s for a client.

Use diverse datasets

Many current AI models reflect the biases of the media that informed them, reinforcing pre-existing social stereotypes. For this reason, models should be trained on diverse datasets, and their outputs may even need correcting to be more representative.

Protect IP

Don’t use AI models, including Large Language Models (LLMs), trained on work that hasn’t been explicitly licensed for these uses. For example, Getty created a custom AI tool trained on its library, meaning any output from that tool is built on images licensed for AI training.

Promote human-AI collaboration

Look for places to streamline and enhance workflows through AI, and to create new types of human art, rather than to automate creativity itself. (Audiences respond better to a human touch, too.)

Respect privacy

Use any AI-powered analytics about consumption patterns responsibly, ensuring that all user data is anonymized and properly encrypted.

Keep up with AI ethics

Stay abreast of developments in AI ethics. Perhaps transparency becomes less important as the technology becomes widespread, but the choice of model carries larger ethical weight. Like the technology itself, these conversations will move quickly.
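As one illustration of the “Respect privacy” principle above, the following Python sketch replaces user IDs with a keyed hash before viewing data reaches an analytics report. The field names, sample events, and key are all hypothetical. Note that this is pseudonymization, which is weaker than full anonymization, and it does not replace encrypting data in storage and in transit.

```python
import hashlib
import hmac

def pseudonymize(user_id, secret_key):
    """Replace a user ID with a keyed hash so reports never carry the raw ID."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

def strip_identifiers(events, secret_key):
    """Keep only the fields needed for analysis, with the user ID pseudonymized."""
    return [
        {"viewer": pseudonymize(e["user_id"], secret_key), "title": e["title"]}
        for e in events
    ]

# Placeholder key for illustration only; a real key belongs in a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret-key"

# Hypothetical viewing events.
events = [
    {"user_id": "ana@example.com", "title": "Space Docs", "ip": "203.0.113.7"},
    {"user_id": "ana@example.com", "title": "Jazz Live", "ip": "203.0.113.7"},
]
cleaned = strip_identifiers(events, SECRET_KEY)
print(cleaned[0]["viewer"] == cleaned[1]["viewer"])  # True: same viewer, same pseudonym
```

The same viewer always maps to the same pseudonym, so consumption patterns can still be analyzed, while the email address and IP address never leave the source system.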

Future AI trends in Media and Entertainment

While the ethical guidelines above are the most important pointer in this article, staying forward-focused about AI use cases is probably the most fun. A lot of this seems like science fiction. That’s part of the reason to keep a toe in this space: for all its disruptive capability, AI’s powers are legitimately transformative, and the creative teams who wield it will have not only a competitive advantage but a creative one.

Likely future AI use cases include:

AI-powered virtual reality (VR) and augmented reality (AR): Extrapolate AI’s ability to generate realistic images and short videos to an ability to generate a world experienced via VR headset. Designing those worlds is, at present, highly resource-intensive, but fast-moving development workflows could create custom environments (and narratives) to explore.

Convergence between gaming, film & VR: More complex non-player characters in games could take the form of highly interactive companions like those in the movie Her. Creatives could design these companions and the narratives that users experience alongside them.

More useful real-time collaboration: For creatives, this could be a dream writing partner to bounce jokes and wild ideas off of. For technical workers, this could be an AI co-editor who could take notes on the rhythm of a scene in progress.

A post-search internet: Search has long been one of the building blocks of the internet. If it’s usurped by chatbots, which can generate custom answers on command for users, it will alter how people use and interact with the internet. Whether that looks like a metaverse or something else entirely remains to be seen.

Prepare for the AI future with LucidLink

Ultimately, no one can really predict what far-flung dreams AI can help make real. But one thing is certain: vastly more content will be generated. LucidLink can seamlessly embed AI-generated content in media workflows.

It features best-in-class security and encrypted storage, helping creative teams comply with AI best practices. It facilitates instantaneous collaboration and sharing regardless of where creative teams are located, working to supercharge creative workflows just like AI does. Explore how LucidLink can help your creative teams and try it for free.
